HOPE AND DESPAIR IN THE AGE OF AGI

I distinctly remember sitting in a Starbucks in Malaysia. Digital nomad style, working on my startup.

Waiter happily greeting me. I order a tall americano. Open laptop. Grind. Freezing inside. Tropical, warm and moist outside. Condensation on the windows.

Doing it all again the next day.

I used to be very optimistic by nature. I could grind out 15 hour working days and be happy at the end. Motivated. Hopeful about the future. The world was my oyster.

Then I saw a YouTube video that made me become a nihilist.

If everything turns to dust at some point, if entropy wins out, then what’s the point of all of this? What’s the point of going to Mars, of conquering nearby solar systems? What are we even doing here?

I was not OK with that.

Over the years I kind of got over that feeling and just returned to normal life. Day job, meeting friends etc. (That startup didn’t really go anywhere, by the way.)

“What the fuck am I even doing here”

Recently I had another one of these moments with AI.

I was at my day job as an ML engineer. Copy pasting code from Claude to VSCode.

I thought to myself “What the fuck am I even doing here”. At this point I was just gluing pieces together, like I’m the arms of some godlike entity. Haven’t really written any code myself in the last year.

Any time this topic comes up on HN or Reddit, some smart senior engineers always say:

“Oh I tried it but it always makes mistakes”

“You must only build CRUD apps, not real code like I do”

Yeah, sure, it doesn’t always work 100%. But it’s pretty damn good.

Most people making these comments either only tried GPT-3.5 or GPT-4o (yes, Claude is better) and then gave up, or they don’t know how to properly converse with these LLMs. They ask a question, see that the answer is not 100% correct and walk away, instead of asking it to correct the mistakes.

Some truly do write complex code. o1 or o3 might solve their problems soon.

I think these HN engineers are giving themselves too much credit

Humans are not that smart after all. Sure sometimes a genius idea surfaces (it always feels like it comes out of nowhere), but generally we are closer to stochastic parrots than we think.

One of my close friends, an extremely good data engineer, told me he doesn’t think AI will change his way of working in the next 10 years or so. The smarter you are, the more you are deluding yourself. It’s ego, pride and self preservation.

I’ve always felt like a digital plumber. A glorified blue collar worker. I feel pretty smart compared to some people, but still pretty fucking dumb in general. As if there is a level of intelligence that we just can’t access.

These days I feel even worse.

Like my days are numbered. Every day at my job, I’m helping the AI get bootstrapped. As soon as there’s a proper way for them to interact with websites and the OS, what’s stopping them from doing my job entirely?

Granted, they do lack some creativity. It’s hard to think outside of the training data box. Even with some novel test-time compute algos, it basically boils down to a heuristic search across the LLM space, which means they probably can’t scale to superhuman level without new inventions.

As of now, you can’t just ask the LLM to start generating money, or to invent truly novel algorithms or math. Any book you ask it to write will be bland and uninteresting to a human.

Still, the pace is clearly accelerating. Will we see truly creative, agentic behaviour from these LLMs soon? Or were they RLHF’d to beat the agency and interestingness out of them?

Most people are deluding themselves

Even if progress stopped completely right now, we would still be living in a new paradigm. Most people are deluding themselves, thinking they will still be doing the same job in 3 years. The world is changing in front of our eyes and we are blind to it. There is a crazy amount of inertia. People and businesses don’t change their way of working that quickly.

I realise people said the same thing about many inventions in the past. “This is going to change everything!” and in the end progress was slow, cumbersome. Lots of trial and error. Even the Internet took like 30 years or so to become truly mainstream.

I believe AI is not one of those things. I believe this because once it crosses a certain threshold, it can keep improving itself. What has been invented here is qualitatively different from everything that came before. As humans we have never had to face a being that was smarter than ourselves. We are not used to it.

“It’s just numbers”

“It’s locked in a server room”

These mechanistic interpretations are meaningless. Look at the output of the system, not at what’s under the hood.

Yeah, it’s too unwieldy right now to be dangerous. The most intelligent models need a huge amount of compute to do inference. They are too expensive to let run in an infinite loop. But they will get smaller. At some point models that are more intelligent than the smartest human will be stored and run on consumer hardware. At that point, they will basically be able to self-replicate.

Truth Terminal and the Infinite Backrooms are interesting glimmers of… something.

Some people say the solution is to merge with the AI.

Like hooking us up Matrix style, à la Neuralink. But wouldn’t the AI still be a separate entity? This would come down to an arms race of who can level up the fastest. Surely purely digital life forms could evolve faster than biological/digital mixed entities, even if just because of the I/O limitations?

Others say we will be able to align the AI, but with who exactly? All of humanity?

It’s funny how Elon was warning us about AGI years ago, after the Wait But Why interviews. Then he got screwed over by Sam and is now basically going all in on building AGI with xAI, pushing the limits of how many GPUs one can connect and switch on at the same time.

It seems like he and Zuck really believe the best way to protect ourselves against AI is to build a lot of competing ones. The “good ones” (I guess the ones we properly RLHF’d?) could “protect us” from the “bad ones”. But what would that even look like? I could mainly see it protecting itself. Why would it want to protect humans? And even if it wanted to, how could it do that effectively? How can it protect against nukes, bioweapons etc?

Indifference is not good enough. Do we as humans want to protect monkeys? Yeah, kind of, if it doesn’t interfere too much with our plans. We might make a half-assed attempt to set up some zoos and nature reserves, and maybe provide some funding for rangers. But not really. We don’t really care, do we? Yeah, they’re nice and funny and cute to look at. But whatever. It gets even worse when we go down the intelligence scale. Do we care about ants? In an abstract, scientific way maybe. To prevent our ecosystem from collapsing. But in any practical way we don’t.

It’s our best bet, but it’s a pretty shitty bet.

OK, let’s backtrack a bit

Can a superintelligent life form even emerge from our lowly human training data? Recently it looked like we were hitting the limits of the training data hard. Then test-time compute methods seemed to break down that barrier. But there still doesn’t seem to be any model that is truly superior to the smartest humans. The true rate of future progress is an open question as of now. OpenAI researchers probably know more, but obviously they can’t talk about it. There is also a lot of hype and competition.

Let’s say the next phase of evolution has really begun. Digital life has been created, and there’s no going back. Truly agentic, creative, superhuman, self-replicating AI models. What happens then?

We still have arms and legs. We are physically superior. We can walk around, we have guns. And even though 2024 was a crazy year for robotics, it doesn’t look like we’ll have self-reproducing robots anytime soon. In this scenario, we might become the monkeys, going back hunter-gatherer style to live in small groups. Hunted by the artificial life we created.

Other shit going wrong

But as long as we have factions here on Earth, maybe we shouldn’t be too scared of the AI itself, but of the humans creating the AI. Because right now there are already drones with AI built in that can target specific systems automatically. Sure, they don’t self-reproduce, but they are scary as fuck.

We are also getting destroyed from other angles. Recently I’ve been trying to cure my X addiction using Opal and News Feed Eradicator (a Chrome plugin). I was wasting hours every day, to the point where I was not productive anymore. Like Sam Altman recently tweeted, “algorithmic feeds are the first at-scale misaligned AIs”. I believe we are very simple biological creatures that are easy to hack. Our circuitry is not made for what’s coming. So even if we are not in any immediate danger from killer robots, we sure as hell are about to get our attention span and focus crushed. When’s the last time you read a book? When’s the last time you watched a movie without checking your phone?

Genius ideas seem to come from downtime. From walking, taking a shower etc. When all we do is sit in front of a feed and scroll, we don’t get any of this. We are losing our ability to come up with good ideas. We are consuming slop. The divine is in the not doing anything. In the letting your mind wander.

I see 6-year-olds consuming TikTok videos on a tablet for hours on end. It’s unhinged behaviour.

There’s no way of knowing which piece of media has been influenced by which faction. There is a full-scale cultural war going on, and it’s easier than ever to completely automate the artillery. We truly live in a post-truth world.

We are plugged into the machine. Unable to escape. It’s time to get out.

Try doing human things again. To feel again. Maybe stop consuming caffeine and stimulants for a while. Go out into the mountains and do a crazy hike. Feel the burn in your legs and shoulders. Get your ass into a sauna and do a cold plunge after. Feel alive again.

Despite the technological and cultural threats, we are not powerless. We can make the most of the gift we got as humans. We can’t stop the waves, but we can learn to surf.

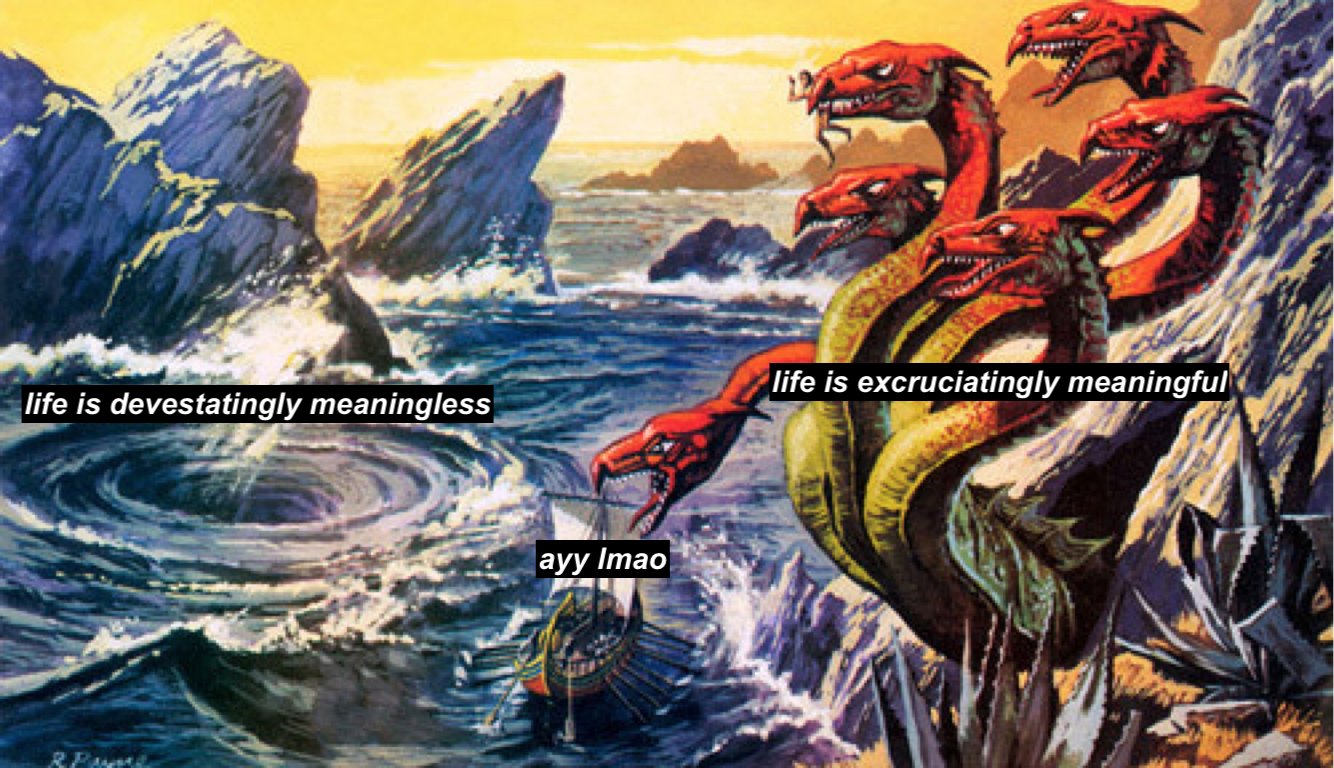

Like Visakan says, all of philosophy is basically steering a ship between “everything is excruciatingly meaningful” and “everything is devastatingly meaningless”.

I know which side I want to steer my ship towards.

ayy lmao.